These days, it’s difficult to be online without seeing at least one news article about ChatGPT. Naturally, the internet has a lot to say, and the tenor of opinions about it (and about artificial intelligence in general) seems to fall somewhere between Marc Andreessen’s upbeat techno-capitalism and Noam Chomsky’s critical skepticism, with the remainder being the believers in a future AI-induced apocalypse. In academia, digital arts and humanities are on the rise; realistically, every funding-hungry scholar and administrator is eyeing technology as a way of staying relevant (and open for business) — including me.

Of course, the mad dash for research funding isn’t the only reason I’ve begun incorporating digital technology in my work. The fact that life in the Global North is increasingly mediated by devices has changed the way we experience the world and create meaning within it, so I am interested in thinking about what gets lost, but also what gets added, when digital technologies are enmeshed with human existence. That exploration is also textured by my concerns about the environmental and neo-colonial violence that sustains modern life, as well as the need for alternative forms of consciousness to circumvent its brutal, totalizing presence. So, after encountering ChatGPT earlier this year, I couldn’t help but see its critical potential — not as a lens for justifying the importance of humanity but as one for challenging the impulse for that justification. It occurred to me that framing AI chatbots as participants in artistic practice might prove interesting, even fruitful, to my current research on affect in human-machine interactions.

Frankly, I am neither interested in writing extensively about ChatGPT or how artists can incorporate AI into their work, nor qualified to do so. Instead, I have chosen to focus on ChatGPT because of the way it has been sensationalized and made so accessible to the public, not to mention the rhetoric of social empowerment adopted by its parent company OpenAI. Moreover, OpenAI’s emphasis on making ChatGPT as approachable and servile as possible to humans necessitates delving deeper into common presumptions of anthropocentrism vis-à-vis AI machines.

This article positions ChatGPT as a heuristic for exploring facets of our perception of AI and how to reimagine them more radically; in other words, I want to figure out how particular ways of knowing something alter our relationship with it. As a result, I discuss two epistemological orientations toward ChatGPT: 1) an anthropocentric, utilitarian one that currently dominates public discussions of AI, and 2) a circumventive, playful one that highlights non-productive qualities of difference, fragmentation, waste, and absence. The use of “orientation” implies that these two perspectives do not represent static, self-contained truths. Rather, they emerge from specific ways of positioning oneself; as queer theorist Sara Ahmed writes, orientation entails how “we come to find our way in a world that acquires new shapes, depending on which way we turn.”[1] Thus, the utilitarian and playful stances are merely two (of many) that, when placed alongside each other, conjure certain tensions surrounding the means and purposes of creativity/creation in a machine-mediated world.

With the former, I discuss the hierarchical existential relationship between humans and machines and the way it inevitably creates an epistemology of utility — what I call a “tool orientation” — around ChatGPT and other AI technologies. My response is an alternative “toy orientation,” an epistemological bricolage that cobbles ideas about play from twentieth- and twenty-first-century philosophers. Casting ChatGPT as a toy allows its behavioral qualities to assume other roles, opening us to a form of relationality not predicated on productivity and utility. This ludic encounter with an AI chatbot enables us to see our present insistence on utilizing bodies as the driver of our existential anxiety. We fear being replaced by machines only because we have become epistemologically oriented toward the swap; we already easily exchange everything, including ourselves, in one click — hassle-free returns guaranteed. In its refusal to be useful, play (whether in language, art, technology, or everyday life) leads us toward lively acts of resistance and reparation, which are urgently needed in a world that sees commodity and existence as one and the same.

Interlude I

In the beginning, God created the heavens and the earth. And God said, “Let us make man in our image, after our likeness: and let them have dominion over the fish of the sea, and over the fowl of the air, and over the cattle, and over all the earth, and over every creeping thing that creepeth upon the earth.” So God created man in his own image, in the image of God created he him. Some undefined amount of time later, man said, “Let us make machines in our image, after our likeness: and let them have dominion over the fish of the sea, and over the fowl of the air, and over the cattle, and over all the earth, and over every creeping thing that creepeth upon the earth.” So man created machines in his own image, in the image of man created he them.

Growing up deeply ensconced in the evangelical church, I was taught very convincingly that “humans were created by God and live according to his purpose.” It’s a theological statement that must be believed to be true, and one that eerily parallels this one: machines were created by humans and work according to their purpose. This second statement seems obvious — the kind that merits a “no shit!” response — a fact that doesn’t require some arbitrary belief to make it true. Or maybe it does.

Oh, what’s the use?

In 1950, six years before the field of artificial intelligence was established, Alan Turing introduced his famous “imitation game,” later called the Turing test.[2] The imitation game is a test that demonstrates how effectively a machine can express intelligent behavior, assessed simply by whether a human evaluator can distinguish between the machine and another human in a blind, text-based conversation. The Turing test was really a philosophical inquiry into the boundaries of human — not machine — intelligence and behavior, or as cognitive scientist Stevan Harnad writes, “Turing’s proposal will turn out to have nothing to do with either observing neural states or introspecting mental states, but only with generating performance capacity (intelligence?) indistinguishable from that of thinkers like us [humans].”[3] Following Turing, applied AI is unabashedly transhumanist in its quest to replicate or imitate human-like intelligence as an extension of (not replacement for) the human, which is apparent in the development of chatbots like ChatGPT.

At its core, ChatGPT (the GPT stands for Generative Pre-trained Transformer) is a large language model (LLM) built on the transformer, a type of neural network architecture. Such models are trained on a massive supply of text data, much of which has been scraped from web pages, and generate output through next-token prediction: given an input sequence, the model predicts the most probable next token (roughly, a word or word fragment), based on patterns learned from its training data. What makes ChatGPT different from its predecessors (GPT-3 and GPT-3.5) is that it also uses Reinforcement Learning from Human Feedback (RLHF), a system that includes human input in the training process to reduce errors and biases and essentially teach the model how to sound more human, or as one writer put it, to “align with human values.”
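For readers who want a concrete picture of next-token prediction, here is a minimal sketch in Python. It is not how ChatGPT is implemented: it assumes a toy word-pair (bigram) counter in place of a transformer, and the tiny corpus and all function names are hypothetical, chosen only for illustration. What it shares with a large language model is the basic move of picking a likely next word given what came before.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training corpus" (an assumption for illustration);
# real models learn from vastly more text.
CORPUS = "the sky is blue . the sky is blue . the potato is brown ."


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_token_distribution(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    total = sum(counts[word].values())
    return {token: n / total for token, n in counts[word].items()}


def generate(counts, start, length):
    """Greedy generation: repeatedly append the most probable next word."""
    output = [start]
    for _ in range(length):
        followers = counts.get(output[-1])
        if not followers:  # no observed continuation, so stop early
            break
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


counts = train_bigram_counts(CORPUS)
print(next_token_distribution(counts, "is"))
print(generate(counts, "the", 3))  # the sky is blue
```

Because this toy always takes the single most probable word, it can only repeat what it has counted. Production systems typically sample from the probability distribution instead, which is one reason their output varies from one run to the next.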

What has made ChatGPT so revelatory to the public is its seemingly sophisticated grasp of human language — thanks to RLHF — combined with its vast corpus of data. The critics, however, are quick to note that LLMs aren’t thinking, at least not in the way humans do. Media scholar Matteo Pasquinelli insists that AI programs merely engage in “pattern recognition, statistical inference, and weak abduction,” compared to the complex nuances of human cognition.[4] Douglas Hofstadter, cognitive scientist and author of Gödel, Escher, Bach, refers to the “Eliza effect,” named after Joseph Weizenbaum’s 1966 ELIZA chatbot, as the human susceptibility to “impute far more depth to an AI program’s performance than is really there, simply because the program’s output consists of symbols—familiar words or images—that, when humans use them, are rife with meaning.”[5] It’s not so much that chatbots have a sophisticated grasp of language as that their arrangement of symbols is encoded as meaningful to swaths of human users.

Thus, ChatGPT’s imitative intelligence requires us to validate the imitation; its successful performance is contingent upon our recognition of whom it is performing. Hofstadter, in response to a GPT-4-generated essay written supposedly in his style, excoriates advocates of the technology:

I would say that that text, in sharp contrast to what I myself wrote in [Gödel, Escher, Bach]’s 20th-anniversary preface, consists only in generic platitudes and fluffy handwaving. […] Although someone who is unfamiliar with my writing might take this saccharine mixture of pomposity and humility as genuine, to me it is so far from my real voice and so far from GEB’s real story that it is ludicrous.[6]

Here, the machine fails to demonstrate intelligence to Hofstadter because its imitation of him was invalid. In looking for an imitation, though, we no longer are interested in whether machines can think. We are, as Harnad says about Turing’s test, ultimately invested in how closely machines can perform “human” — that is what makes machines intelligent and intelligible to us.

Nevertheless, alternative perspectives of machine intelligence do exist. Scholars like Beatrice Fazi and Luciana Parisi analyze computational behavior to argue for unique forms of what they call “algorithmic thought.”[7] Both Fazi and Parisi’s rejections of human cognition as the baseline for intelligence allow them to pursue a new way of thinking about machines based on how they work, not how they work in relation to humans. Rather than focusing on computational output (i.e., whether AI-generated material matches human-generated material), they are interested in the unique qualities of algorithmic thought as a kind of posthuman neurodivergence.

One of the obstacles to pursuing the algorithmic thought approach is the question of applicability. We are hardly content with allowing artificial intelligence to remain purely theoretical; that would be too academic, too indulgent. The anthropocentrism of imitative AI goes beyond the need for humans to recognize themselves in machine behavior to justify intelligence. Turing states at the end of his famous imitation game paper, “We may hope that machines will eventually compete with men in all purely intellectual fields.”[8] Why? In all this talk of intelligence, there seems something more existential at stake: humans use machines as a means of species prolongation. As such, the most effective way for machines to do this is if they are designed to serve our priorities and express our values. They are most useful to us, not when we think of them as autonomous (because of the potential for deviance), but as para-humans.

This epistemological impulse brings to mind Marshall McLuhan’s technological determinism, particularly his perspective of technology as an extension of the human mind and body, which allows it to inform and change human societies. He writes that one of the underlying themes in his book Understanding Media is “all technologies are extensions of our physical and nervous systems to increase power and speed”[9]; the lightbulb becomes an extension of the eye, the car an extension of the foot, and the computer an extension of the brain. My point in bringing up McLuhan is not to critique his theory but to point out a belief that he (and many of us) takes for granted: technologies are fundamentally designed to be functional. In other words, they are always cast as tools — vehicles for human survival.

OpenAI’s website has an entire page titled “Safety,” emblazoned with this statement from the company’s CTO: “AI systems are becoming a part of everyday life. The key is to ensure that these machines are aligned with human intentions and values.” ChatGPT for iOS is described as one of OpenAI’s “useful tools that empower people.” The company mission page is peppered with phrases like “developing safe and beneficial AI” and “maximize the social and economic benefits of AI technology.” ChatGPT has become a tool of mostly data-driven labor — writing emails, finding recipes, giving prosaic advice, or sourcing information — but increasingly, emotional and mental health support as well. It’s equal parts parent, butler, secretary, career coach, therapist, and encyclopedia.

The goal of ChatGPT is to liberate us from all those menial, unnecessary, and burdensome information tasks that we’re tired of doing, ostensibly to make us happier, more productive, free to pursue more pleasurable interests, or all of the above. (Though, personally, I’d prefer we structurally address the proliferation of bullshit work that makes AI tools like ChatGPT so appealing). And ChatGPT is only one of the many AI tools touted for being incredibly useful. AI’s accelerated computational speed enables technologies that supposedly improve the quality of human life (e.g., more efficient mining of battery metals, more affordable education) and expand human knowledge (e.g., transspecies communication). When I see the astounding technologies we’ve developed with AI, I can’t help but recall this observation by Jean Baudrillard: “Man dreams with all his might of inventing a machine which is superior to himself, while at the same time he cannot conceive of not remaining master of his creations.”[10]

Of course, utility has a dark side. There is the exploitative potential of useful AI, facilitating the ease with which corporations can outsource compensated human labor to uncompensated machine labor (e.g., the ongoing WGA and SAG-AFTRA strikes). One could say that the solution to all these problems lies in finding the most humane uses of AI, but I would respond that that ethical discussion relies entirely on an (anthropocentric) utilitarian belief, which is only one philosophical perspective (of many). “Safety,” as delineated by OpenAI, seems to refer only to safe design and use of the tools themselves. Safe tools often intend safety only for the user; they do not necessarily protect the objects on which the tools are being used.

As a result, it’s easy to think of — to be epistemologically oriented toward — AI as a tool because we are intensely concerned with keeping ourselves alive, with keeping the power that comes from wielding powerful tools. Even something frivolous like ChatGPT, which is really a machine that has been trained to “speak” human, must conform to our impulse to turn what it can do into what it can do for us, whether that be taking on administrative drudgery or offering emotional companionship. Consequently, viewing ChatGPT as a tool affects its training, which relies on specific types of datasets and feedback mechanisms that maximize anthropocentric utility. For one, ChatGPT needs to be helpful, meaning it achieves our objectives, and it needs to produce accurate information (false information is generally considered useless). It should not be harmful, in that it should not generate or encourage behaviors deemed malicious or illicit in human society. In other words, it should comply with Asimov’s Three Laws of Robotics.[11]

Isaac Asimov’s Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human to come to harm.
2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Ultimately, I am critical of the utilitarian approach to AI because it valorizes the human desire to endlessly extend ourselves more powerfully and more quickly, without stopping to reconsider whether and why we are doing so. The tendency to view objects as a means-to-an-end exacerbates the kind of alienated consumption that pervades modern human life, driving the imperatives for how we exist, think, feel, and create.

What happens if ChatGPT isn’t trained to be helpful, accurate, or harmless? It would cease to be useful, at least in a productive way, and therefore, no longer a (human) tool. Instead, it becomes more like a toy — something with which we can play and test its limits, and something that cannot take on commodity value because it “must put back into play within the game itself.”[12] The toy orientation follows what Baudrillard calls the circular “symbolic social relation” in which there is “the uninterrupted cycle of giving and receiving,”[13] rather than the linear, finite transactions that define capitalistic use and exchange. Moreover, I should note that the toy orientation doesn’t establish a static ontological identity, and it isn’t used for entertainment, since that would render the object as a tool for pleasure.

“I am profoundly troubled by today’s large language models. I find them repellent and threatening to humanity, partly because they are inundating the world with fakery.”[14]

Douglas Hofstadter

Instead, the toy orientation is an epistemological relation characterized by the absence of utility and the presence of diversion. To think of ChatGPT as a toy means engaging with it fictitiously, subversively, or exploratorily, focusing less on its functional applications and more on its affective, animating potential. It means entering the world of games, of fiction, of bits and satire, and of freeplay. It means relinquishing one’s judgment of the artificial as a distortion of the real and viewing truth as normative rather than absolute knowledge. The toy creates an epistemology of fakery in which knowledge is not weighed by its universal truthiness but by its localized generation, or acts of play.

Interlude II

This summer, I’ve spent a lot of time watching my cats play — something they do frequently since there’s not much else to see from my ninth-floor apartment in Toronto. Even though we share the same space, their worlding seems much more dynamic than mine: they bring me stuffed animals and old harp strings, pounce on garlic husks, and chase after moving legs; I sit in front of the computer, stationary for most of the day, typing out this article. The fact that my cats are not actually hunting (at least for food) renders their actions more akin to play than anything functional. I like that Hans-Georg Gadamer calls animals’ strong propensity for play “the expression of superabundant life and movement,”[15] as if they are bursting with a vitality that humans have somehow lost. As I watch one of my cats stalking a tuft of lint, I wonder whether she believes she is hunting or playing. I wonder what part of herself she hopes to nourish.

Playing with Play

As a child, I was always elated when my classmates elected to play prisonball at recess. A variant of dodgeball, prisonball adds the caveat that if one is hit by a member of the opposing team, they are then captured and placed behind the opposition. While “in prison,” one can be freed by receiving a pass from an uncaptured teammate. The team that captures all members of its opposition wins. What I loved about this game was the thrill of evasion: the feeling of the ball whizzing past, barely touching my skin. Freedom was contingent on my body’s ability to contort itself around the ball, to bend in ways that the opposition couldn’t anticipate. Small and unnoticeable (and really bad at throwing), I often found myself the last player standing, watching my teammates hollering and waving at me to pass them the ball. Knowing I couldn’t save them, all I could do was prolong capture by dancing our last rites, hoping that the school bell would ring before the game could end.

I bring up this memory to introduce some salient theoretical characteristics of play: 1) absence of stable structures, 2) evasion/prolongation of capture, and 3) lively materiality. First, Jacques Derrida calls this absence freeplay. In “Structure, Sign, and Play in the Discourse of the Human Sciences,” Derrida suggests that playing within a centralized structure does not have deconstructive power; it only has oppositional power that replaces one center with another. His alternative to structured play is freeplay, which he describes as “the disruption of presence” and “the play of absence and presence.”[16] In a confusing twist, to freeplay is also to not acknowledge freeplay as a center. Freeplay is a perpetual absence in which absence cannot be cast as an antidote to presence. Instead, freeplay is most akin to fugitivity: being grounded in taking flight but taking flight to escape being grounded.

One expression of freeplay is the amorality of games, not in the sense that games are immoral, but that game-action escapes a human moral structure; in play, “all that counts is the event of the game itself and the advent of shared rules.”[17] The point is we have to abandon moral law for the sake of the game; as such, theft, betrayal, and death lose their “real” significance but are able to take on new meanings in gameplay. Thus, leaving the realm of the real world allows us to consider, for a moment, the possibility of other realms of existence. We might consider that the question of survival doesn’t always lead us to a totalizing system of resource accumulation/expenditure (that defines living in the real world), but that survival can occur through localized acts of escape, such as in the fugitivity of prisonball, or perhaps, a game of chess against a machine.

In “Deep Blue, or the Computer’s Melancholia,” Jean Baudrillard writes about Garry Kasparov’s triumph over the chess computer Deep Blue in 1996:

This relinquishment [of the human’s own thought] … is the subtle assumption of game-playing. It is here that the human being imposes himself through illusion, decoy, challenge, seduction, and sacrifice. It is this strategy of not going the full hog, of playing within one’s capabilities, that the computer understands least well, since it is condemned to play at the height of its capabilities. This syncopation or ellipsis of presence by which you provoke the emergence of the other—even in the form of the virtual ego of the computer—is the real game-player’s thinking.[18]

Notwithstanding Baudrillard’s premature conviction that humans play better than computers (Deep Blue beat Kasparov in a 1997 re-match), he conjures an intriguing relational condition for play: the calculated absence of self that allows the other to reveal itself. It is a strategy often used in hunting, in which the hunter may hide and thus lure their prey into showing itself. In perhaps a more familiar example, ellipting one’s presence drives one of the most common mind games in human relationships: withdrawing is supposed to trigger anxiety in the other person, provoking them into being present. Power plays aside, an important takeaway is that play is not transparent. It is a game of hide and seek, a constant negotiation in which one never feels fully complete, stable, or centered. Instead, play depends on a certain evasion and uncertainty so that we never know what is going to happen next. After all, the game ends when we lay all our cards on the table.

Won’t we have to stop playing at some point? German philosopher Hans-Georg Gadamer would answer that play is defined by perpetuity; play is “the to and fro of constantly repeated movement … a movement that is not tied down to any goal.”[19] Unlike Derrida and Baudrillard, who apply play to semiotics, Gadamer directly connects the idea of play to art, arguing that the work of art isn’t a passive object to be interpreted by a subject, but rather, it exists through the lively material interplay between artists, materials, space, and viewers. This ongoing performativity is what animates and imbues meaning into the work; otherwise, “without the ongoing movement of play, the game ceases, and the artwork falls silent.”[20]

While Gadamer’s concept might seem satisfactory as an application of play to art, it limits play to the ontological condition of the artwork. Art, he argues, comprises acts of play, but my question is, what do those acts look like and how do we enact them? Derrida’s absence offers a deconstructive method and Baudrillard’s evasion, a subversive intent, to the lively materiality that already underlines the artwork, though it is important that the deconstruction/subversion never re-establishes a new dominant structure.

In artistic practice, I can imagine a few ways in which these three characteristics of play can emerge. First, play challenges the impulse to create objects of art. In a world that takes the salability of an artwork as the sign of its value, it seems indulgent and irresponsible to critique the practice of making a living, but as I have written, the point of the game is to radically imagine other forms of survival and nourishment. How can we participate in an art-making that evades capture, that constantly shape-shifts so we can never hold it long enough to call it an object? As a musician, I think a lot about recording practices, which can embody/have embodied the evasive and lively materiality of human-machine art-making (e.g., remixing). However, the fact that we currently emphasize sterility and (re)playability in recording aesthetics denies the liveliness of play — literally, the liveliness of playing sound — in favor of something that can be more easily sold and used.

Play also encourages a relational-performative approach marked by absences of various semiotic structures, including (but not limited to) paradigms of evolutionary dominance, culture, intelligence, truth, utilitarianism, capitalism, or morality. When art-making becomes a ludic space, the only stakes that exist are those in the game, opening structures to critique. So, we as humans do not enter bringing our beliefs about our humanity with us, but we focus on temporal, affective encounters with other things in that space, allowing its reversibility and circularity to constantly displace us and force us to see differently each time.

ChatGPT & Me

Now that I have positioned myself to play with — not use — ChatGPT, what might I see differently about it, myself, or our shared world? Remember, my initial purpose wasn’t to figure out the truth behind ChatGPT; it was to wrestle with centering the human in an epistemological orientation toward chatbots that parallels the functional turn in artificial intelligence models. Re-turning ourselves toward the toy and its play generates an alternative paradigm for how we interact with ChatGPT, opening us to the possibility of algorithmic life as apposite, not opposite, to human life.

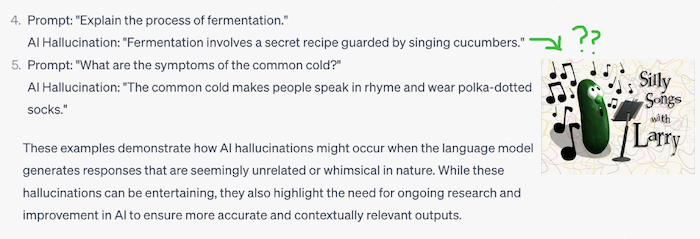

One AI behavior seen as a major problem for human users is the hallucination. Hallucinations are logical mistakes committed by an AI, typically resulting in “unexpected, untrue results not backed by real-world data. AI hallucinations can be false content, news, or information about people, events, or facts.”[21] OpenAI’s solution is to disclaim that ChatGPT isn’t always accurate, so people should use it with caution (and/or rigorous fact-checking). If we are oriented toward ChatGPT as a tool, of course, the need for accuracy in output is important because the intent is to apply it to real world situations; we’re trying to use the information for something. However, in a toy orientation, an output isn’t judged on its truth-value or its ability to mimic human reasoning but its potential for interactive play. So, if ChatGPT hallucinates a fact, our response wouldn’t be to point out its error but to follow with “yes, and…” Perhaps like poetry, the juxtaposition of words can be more revelatory than the words themselves:

The color of the sky is a potato

The color of the sky is a potato,
A whimsical notion, a tale to sow.
From the earth's embrace, it takes flight,
A sky transformed, an enchanting sight.
No longer blue, but a tater's skin,
With earthy tones, a charming spin.
Clouds of starch float with grace,
Painting the heavens in potato embrace.
In this realm, let fantasies bloom,
Where skies and potatoes find their room.
For in the land of poetic creation,
The sky is a potato, a sweet revelation.

ChatGPT

ChatGPT’s potato poem is just one very silly example of playing with hallucinations. However, it perfectly demonstrates several ideas I’ve tried to explore in this article. At the most basic level, it is the outcome of a non-productive interaction between me and ChatGPT — a simple act of orienting myself to it as a toy. In prompting it, I simply asked it to create a short poem starting with an example it gave of an AI hallucination, “the color of the sky is a potato”; my parameters were intentionally sparse since I wasn’t trying to produce any specific outcome and was more interested in what Parisi calls the “interactive logic” of an AI, built on a “technical mentality of computation [that] is bound not to essence or substance but above all to the technique-sign.”[22] It also responds to Derrida’s freeplay; the absurd, hallucinatory origins of this poem allow it to evade a structure of intelligence centered around human reasoning. Rather than focusing on its rhetorical quality and whether ChatGPT can write “good poetry,” what is more joyous and affirming is tracing its interpretative possibilities and perhaps then, letting it play freely with us. It is when we open ourselves in this way that we can more fully experience the game that is the poem’s “ongoing movement” — the acts of play that envelop us into nonsensical worldings.

‘Til next time

As a closing gesture, I want to invoke Eve Kosofsky Sedgwick’s concept of reparative reading, because it also opens us to continue playing beyond the concept of play itself. The almost nihilistic circularity of Derrida’s freeplay can make one wonder what the point of play is. While I do think the decadence of excess is a defining quality of play — it loosens some constraints that pragmatic thinking tends to impose — I am also self-conscious that the world is a shitty place, full of inequalities for which a technology like ChatGPT could offer real solutions. My focus on play also is not trying to diminish issues like algorithmic bias that perpetuate all forms of unjust -isms, and certainly, there is a pressing need to address those problems. Speaking particularly to her discipline of queer theory, Sedgwick reminds those invested in critical methods:

[T]he force of any interpretive project of unveiling hidden violence would seem to depend on a cultural context, like the one assumed in [Michel] Foucault’s early works, in which violence would be deprecated and hence hidden in the first place. Why bother exposing the ruses of power in a country where, at any given moment, 40 percent of young black men are enmeshed in the penal system?[23]

She calls this practice of excavation — the zealous demystification of “hidden traces of oppression and persecution”[24] — paranoid reading because the practice is based on a paranoid orientation in hermeneutics. Paranoia, she demonstrates, is a negative affect with a totalizing effect; it draws the world into a reductive space by trying to “tell big truths” (not unlike conspiracy theories). In response to her own history of paranoid reading and writing, she writes that “the desire of a reparative impulse, is additive and accretive … it wants to assemble and confer plenitude on an object that will then have resources to offer to an inchoate self.”[25] Reparative reading asks us to find pleasure first, not horror, in how we see the world. It encourages us to assemble meaning through the world’s fragments, not through trying to create a whole from them, and to be curious, open, and hopeful about what we find.

So, reparative reading and play are one and the same in that they ask us to look, to touch, and to feel before we decide what to know. In that spirit, I find pleasure in the idea that play, beyond engaging discovery, wonder, and creativity, can be reparative. As such, play gives us “room to realize that the future may be different from the present” so that we can “entertain such profoundly painful, profoundly relieving, ethically crucial possibilities as that the past, in turn, could have happened differently from the way it actually did.”[26]

I started this article thinking I would reach some insightful conclusion about how to reimagine human-machine relationships, but the more I played with ChatGPT, the more I learned that pleasure — through the sheer fun of the interactions — could itself be a form of critique. Instead of distancing myself from it with the “critical eye,” I oriented myself closer to it until we were eye-to-eye, so that I could touch, feel, burrow, move, and turn in its grooves.

[1] Sara Ahmed, Queer Phenomenology (Durham: Duke University Press, 2006), 1.

[2] Alan Turing, “Computing Machinery and Intelligence,” Mind: A Quarterly Review of Psychology and Philosophy 59, no. 236 (1950): 433-460.

[3] Stevan Harnad, “The Annotation Game: On Turing (1950) on Computing, Machinery, and Intelligence,” in Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer, eds. Robert Epstein, Gary Roberts, and Grace Beber (Berlin: Springer, 2008), 24.

[4] Matteo Pasquinelli, “Machines that Morph Logic: Neural Networks and the Distorted Automation of Intelligence as Statistical Inference,” Site 1: Logic Gate, The Politics of the Artifactual Mind (2017): 17, https://www.glass-bead.org/wp-content/uploads/GB_Site-1_Matteo-Pasquinelli_Eng.pdf.

[5] Douglas Hofstadter, “Thinking about Thought,” Nature 349 (January 1991): 378.

[6] Douglas Hofstadter, “Gödel, Escher, Bach, and AI,” The Atlantic, July 8, 2023, https://www.theatlantic.com/ideas/archive/2023/07/godel-escher-bach-geb-ai/674589/.

[7] M. Beatrice Fazi, “Introduction: Algorithmic Thought,” Theory, Culture & Society 38, nos. 7-8 (2021): 5-11.

[8] Turing, “Computing Machinery and Intelligence,” 460.

[9] Marshall McLuhan, Understanding Media: The Extensions of Man (Cambridge: MIT Press, 1994), 89.

[10] Jean Baudrillard, “Deep Blue, or the Computer’s Melancholia,” Screened Out, trans. Chris Turner (London & New York: Verso, 2002), 161.

[11] Isaac Asimov, “Runaround,” I, Robot (New York: Bantam Dell, 1991), 25.

[12] Jean Baudrillard, Passwords (London & New York: Verso, 2003), 16.

[13] Jean Baudrillard, The Mirror of Production, trans. Mark Poster (St. Louis: Telos Press, 1975), 143.

[14] Hofstadter, “Gödel, Escher, Bach, and AI.”

[15] Hans-Georg Gadamer, The Relevance of the Beautiful and Other Essays, trans. Nicholas Walker, ed. Robert Bernasconi (Cambridge: Cambridge University Press, 1986), 124.

[16] Jacques Derrida, “Structure, Sign and Play in the Discourse of the Human Sciences,” Writing and Difference (Chicago: The University of Chicago Press, 1978), 279.

[17] Baudrillard, Passwords, 11.

[18] Baudrillard, “Deep Blue, or the Computer’s Melancholia,” 162-163.

[19] Gadamer, The Relevance of the Beautiful and Other Essays, 22.

[20] Cynthia R. Nielsen, “Gadamer on Play and the Play of Art,” in The Gadamerian Mind (London: Routledge, 2021), 144.

[21] Jason Nelson, “OpenAI Wants to Stop AI from Hallucinating and Lying,” Decrypt, May 31, 2023, https://decrypt.co/143064/openai-wants-to-stop-ai-from-hallucinating-and-lying.

[22] Luciana Parisi, “Interactive Computation and Artificial Epistemologies,” Theory, Culture & Society 38, nos. 7-8 (2021): 37.

[23] Eve Kosofsky Sedgwick, Touching Feeling (Durham: Duke University Press, 2003), 140.

[24] Sedgwick, 141.

[25] Sedgwick, 149.

[26] Sedgwick, 146.